Series exploring the transition from NISQ to FTQC

Not a month passes by without a landmark quantum computing demonstration with noisy intermediate scale quantum (NISQ) devices. In the span of a decade, we’ve gone from a handful of qubits in a lab to quantum computers with tens or hundreds of qubits that can be accessed by anyone, anywhere in the world, and that can perform tasks that far outstrip the most powerful of supercomputers.

Alongside NISQ, another area of quantum computing that has seen exciting recent advances is fault-tolerant quantum computing (FTQC). My goal in writing this series is to show why these advances matter even more for the future of quantum computing than recent NISQ demonstrations.

The first part digs deeper into the idea that fault-tolerance is what will unlock the true potential of quantum computing. Subsequent parts will explore how the right roadmaps and architectures can accelerate the path to fault-tolerance; the implications of the first logical qubits and gates that were demonstrated recently; and where we’re headed – the fault-tolerance stack, modularity and beyond.

This series is a collaboration with the GQI team. An executive summary is available on the Quantum Computing Report website.

Part-I: Fault-tolerance will bridge the 10000X gap to transformational applications of quantum computing.

Executive summary

Fault tolerance means that we can design quantum circuits to be reliable despite inevitable imperfections in the quantum bits (qubits) and gates. In a fault-tolerant quantum computer, the reliable ‘logical’ qubits and gates that run the user’s algorithm are each built from a large number of noisy ‘physical’ qubits and gates. It is this large number of physical qubits and gates, connected and controlled in the right way, that turns noisy quantum devices into reliable computing machines.

This post is on how the possibility of computing reliably allows fault-tolerance to deliver the true promise of quantum computing – the promise of not only scientific breakthroughs and faster drug development cycles, but also new materials for batteries and new methods for fertilizer manufacture and carbon capture. These ‘transformative applications’ offer a revolutionary impact on society and the economy, which is why I focus on these applications in this post.

But why is fault-tolerance needed for these transformative applications? That’s because even the least demanding among these requires quantum circuits with millions of gates. Only a fault-tolerant quantum computer can run circuits with this many gates without the output turning into a structureless stream of random numbers.

The gate and qubit requirements of these transformative applications have been an active area of research over the last five years, with contributions from heavy-hitting teams in academia and industry. These include academic teams at Caltech, ETH, MacquarieU, UMaryland, USherbrooke, UToronto, UVienna, and UWashington, to name a few; teams building quantum hardware, such as those at Google, Microsoft, PsiQuantum, and Xanadu; and teams exploring the use of quantum computers, such as those at BASF SE, Boehringer Ingelheim, Mercedes-Benz, and Volkswagen. These so-called resource estimates provide a concrete target for future fault-tolerant quantum computers in terms of the number of logical qubits and the number of logical gates to be applied to them.

Here’s a simplified table summarizing the gate and qubit requirements of the transformative applications mentioned above.

| Application | Gate counts | Qubit counts | Refs. |
|---|---|---|---|
| Scientific breakthrough | 10⁷+ | 100+ | 1, 2, 3 |
| Fertilizer manufacture | 10⁹+ | 2000+ | 4 |
| Drug discovery | 10⁹+ | 1000+ | 5 |
| Battery materials | 10¹³+ | 2000+ | 6, 7 |

Unfortunately, running circuits with this many gates is just not possible with today’s NISQ devices, which are too noisy to run more than a few hundred gates before their output is no longer reliable and useful. Noise mitigation techniques do not close this gap: they often require an infeasibly large number of experimental runs, and they rely on extrapolation-like methods whose output cannot be trusted because of large and often uncontrolled error bars.

The challenge is in going from the tens to hundreds of gates that can be applied on today’s NISQ devices to the tens of millions needed for transformative scientific applications and the billions needed for transformative commercial applications. Fault-tolerance is what will allow us to bridge this 10000X gap, and that’s why many of the strongest teams in quantum computing are working on building fault-tolerant quantum computers.
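The size of this gap can be checked with simple arithmetic. The sketch below takes the gate counts from the table above at face value and assumes, as an illustrative figure rather than a property of any specific device, that today’s best NISQ devices run on the order of 10³ gates (the upper end of "hundreds").

```python
import math

# Gate counts taken at face value from the resource-estimate table (lower bounds).
REQUIRED_GATES = {
    "Scientific breakthrough": 10**7,
    "Fertilizer manufacture": 10**9,
    "Drug discovery": 10**9,
    "Battery materials": 10**13,
}

# Assumption: today's best NISQ devices run on the order of 10**3 gates
# before their output is scrambled (the upper end of "hundreds").
NISQ_GATE_BUDGET = 10**3


def gap_orders_of_magnitude(required_gates: int, available_gates: int = NISQ_GATE_BUDGET) -> float:
    """Return log10(required / available): the number of powers of ten between the two."""
    return math.log10(required_gates / available_gates)


for application, gates in REQUIRED_GATES.items():
    gap = gap_orders_of_magnitude(gates)
    print(f"{application:<25} needs about 10^{gap:.0f} times more gates than today")
```

Even the least demanding row comes out at four orders of magnitude, which is where the 10000X figure comes from; the more demanding applications sit much further away.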

Bridging the 10000X gap is something that will need progress in three areas: first, the algorithms that are already very sophisticated need to get even smarter and allow performing the same calculations with fewer gates. As discussed below, we’ve already seen multiple orders of magnitude reduction in the costs of several of the transformative applications. Second, the hardware needs to get better so that fault-tolerance is even possible. And finally, we need better fault-tolerance architectures, which tackle imperfections in real hardware and allow squeezing out maximum logical performance from the hardware. These last two points will be the focus of subsequent posts in this series. If we’re able to make substantial progress in these three areas, then it’s only a matter of time before quantum computing delivers the revolutionary impact to society and economy that we are all looking forward to!

Exploring quantum computing’s transition from NISQ to fault-tolerance

Before we dive into fault-tolerance, let’s begin by taking a moment to understand the present state of quantum computers in the NISQ era in terms of the circuits that can be run on these devices.

Current NISQ devices run tens of gates before turning into random number generators

Devices produced by hardware manufacturers today are classified as NISQ because they include tens or low hundreds of noisy qubits. These devices stand at the forefront of human engineering, and building these devices plays a vital role in our journey towards realizing the full potential of quantum computing. Impressive as these devices are, they cannot yet function as reliable computers, that is, ones that accurately solve the problem we want solved.

Often, conversations about the limitations of NISQ devices center on the number of qubits. However, another significant factor affecting their utility is the number of gates that can be executed. That’s because the commercially relevant applications of quantum computers need circuits with a large number (tens of millions) of gates, as mentioned in the executive summary. Unfortunately, current NISQ-era computers can run only tens or, in the best-case scenario, hundreds of gates before their output becomes scrambled. This happens because each gate also adds a little noise to the quantum state. As more gates are applied, the overall fidelity of the computation decays exponentially with the number of gates, until the result is dominated by noise.
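The exponential decay can be made concrete with a deliberately simple model, which is an assumption rather than a description of any specific device: every gate independently succeeds with probability f, so a circuit of N gates has an overall fidelity of roughly f^N. Real devices also suffer from correlated errors, crosstalk, and readout errors, which this sketch ignores.

```python
import math


def circuit_fidelity(gate_fidelity: float, num_gates: int) -> float:
    """Overall fidelity when every gate independently succeeds with probability gate_fidelity."""
    return gate_fidelity ** num_gates


def max_gates(gate_fidelity: float, target_fidelity: float) -> int:
    """Largest gate count whose overall fidelity stays at or above target_fidelity."""
    return math.floor(math.log(target_fidelity) / math.log(gate_fidelity))


for f in (0.99, 0.999, 0.9999):
    print(f"gate fidelity {f}: F(100 gates) = {circuit_fidelity(f, 100):.3f}, "
          f"gates before F < 0.5: {max_gates(f, 0.5)}")
```

Under this toy model, even 99.9% gate fidelity allows only about 700 gates before the overall fidelity falls below one half, far short of the millions of gates discussed in this post.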

A case in point is the Sycamore chip that Google used in its quantum supremacy demonstration [1] in 2019. The experiment ran circuits on 53 qubits with 20 cycles, each cycle containing a layer of noisy two-qubit gates, which dominate the errors on the device. The biggest supercomputers would struggle to simulate Sycamore’s output after these 20 cycles. However, the output’s fidelity to the target state was a mere 0.002: in essence, after 20 cycles, the device functioned 2 parts as a quantum computer and 998 parts as a die, basically a uniform random number generator. Those 2 parts in 1000 were indeed sufficient to beat the supercomputers, but it will be a while before the outputs from these devices are reliable and useful.

This observation isn’t meant to criticize Google’s experiment, which was indeed a commendable achievement. Rather, it’s to highlight that the NISQ era is a dangerous place for quantum information: even when individual gates have fidelities above 99%, tens of layers of gates acting on tens of qubits can quickly degrade the quantum information. As we make the circuits deeper, the problem gets worse: the overall fidelity drops further and the output becomes closer and closer to a stream of near-structureless random numbers.

The inherent unpredictability of NISQ devices means that there is no way to guarantee that their output is correct. The output of a small-scale device, with fewer than 50 or so qubits and a similar number of gates, is straightforward to verify, because we can simulate the behavior of these qubits on ordinary classical computers.
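To see why verification runs out of steam at around 50 qubits, consider brute-force state-vector simulation, which stores all 2ⁿ complex amplitudes of an n-qubit state. The sketch below assumes double-precision complex numbers (16 bytes per amplitude); cleverer classical simulation methods exist, so treat this as the cost of the simplest approach rather than a hard limit on all classical methods.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store all 2**n complex amplitudes (16 bytes each for complex128)."""
    return (2 ** num_qubits) * bytes_per_amplitude


GIB = 2 ** 30  # bytes in one gibibyte

for n in (30, 40, 50):
    print(f"{n} qubits: {statevector_bytes(n) / GIB:,.0f} GiB")
```

Every extra qubit doubles the memory; at 50 qubits a single state vector already takes 16,777,216 GiB, i.e. 16 PiB.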

Such verification is simply not possible as we scale up beyond the reach of classical computers. In this regime, especially if a large number of gates is acted on the device, the probability of landing on the correct answer plummets since there are many more ways in which the computation can go wrong. This high likelihood of failure, coupled with the lack of a verification mechanism, significantly undermines the reliability of computations performed by a NISQ device [2].

But how does this bleak picture reconcile with the promise that quantum computing will revolutionize major industries? Let’s now look more closely into the requirements for useful quantum computing – what are the algorithms that will enable these breakthroughs and how many qubits and gates do these algorithms need in order to provide useful results? And finally why is fault-tolerance necessary for realizing this promise?

Quantum simulation will power the first applications that will transform the society and the economy

Quantum computers hold the potential to outperform classical computers in numerous tasks. Among the most important of these tasks for commercial applications is quantum simulation, which is a kind of ‘virtual chemistry lab’. In this virtual lab, we can model and investigate the behavior of quantum systems, such as molecules or materials, whether natural or man-made.

Quantum simulation is likely to be the first task to deliver tangible economic and societal benefits. This is because quantum simulation involves modeling other quantum systems, something that quantum computers are naturally adept at. Moreover, other quantum computing applications with a concrete commercial impact have higher computational costs, e.g., factoring via Shor’s algorithm, as studied by researchers from Google [3] and PsiQuantum [4]. So it is reasonable to expect that the first transformational applications with a significant commercial and societal impact will come from quantum simulation, which is the focus of the rest of this post.

The user of the quantum simulation virtual lab provides a description of a quantum system, such as how the molecule’s electrons and atomic nuclei interact among themselves. Through virtual experiments, the lab then uncovers important properties of the quantum system, including the energies of its lowest-lying configurations, which are the few most stable states the system can occupy. These energies hold the key to advancements in several fields. For instance, in chemistry, they reveal how reactions occur, helping come up with ideas for designing efficient catalysts. In pharmaceuticals, they can predict how a drug molecule interacts with its target, aiding in designing more effective drugs. And in materials science and nanotechnology, these energy states determine the properties of materials, from electrical conductivity to chemical reactivity.
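As a toy illustration of what the virtual lab returns, the sketch below builds the Hamiltonian of a short spin-½ Heisenberg chain (a model that reappears later in this post) and computes its lowest energy levels by classical exact diagonalization with NumPy. This is a classical brute-force stand-in, not a quantum algorithm, and the choice of model and chain length is purely illustrative. The matrix has 2ⁿ rows for n spins, which is exactly why this approach breaks down for realistic molecules and materials.

```python
import numpy as np

# Single-qubit Pauli matrices and identity.
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2, dtype=complex)


def pair_operator(pauli: np.ndarray, i: int, n: int) -> np.ndarray:
    """Return pauli_i * pauli_{i+1} on an n-spin chain, with identities on the other sites."""
    result = np.array([[1.0]], dtype=complex)
    for site in range(n):
        result = np.kron(result, pauli if site in (i, i + 1) else I2)
    return result


def heisenberg_chain(n: int, coupling: float = 1.0) -> np.ndarray:
    """H = J * sum_i (X_i X_{i+1} + Y_i Y_{i+1} + Z_i Z_{i+1}) for an open chain of n spins."""
    dim = 2 ** n
    hamiltonian = np.zeros((dim, dim), dtype=complex)
    for i in range(n - 1):
        for pauli in (X, Y, Z):
            hamiltonian += coupling * pair_operator(pauli, i, n)
    return hamiltonian


# "Virtual experiment": the lowest few energy levels of a 4-spin chain.
H = heisenberg_chain(4)
energies = np.linalg.eigvalsh(H)  # real eigenvalues in ascending order
print("matrix size:", H.shape, "lowest energies:", np.round(energies[:3], 4))
```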

The lab primarily focuses on two areas: molecules, like those in pharmaceutical drugs, and materials, like those used in electric vehicle batteries. Simulating molecules involves dealing with the regions in space where electrons are most likely to be found, called ‘electronic molecular orbitals.’ Although simulating more orbitals can enhance the accuracy, it also adds to the computational cost. For materials, the challenge is even bigger because they comprise far more electrons than can be simulated directly. But, by focusing on the lattice of repeating patterns of atoms in the material, we can efficiently determine properties of the entire material.

To be useful, the simulations must capture a sufficient number of energy levels and compute each of them to a sufficient accuracy. Obtaining more accurate results from the lab requires more qubits and more gates, so a balance needs to be struck: the goal is to find the most efficient quantum circuit that delivers the accuracy in the energy values that the user needs.

This brings us to the main challenge: all these requirements imply significant computational costs, on the order of tens of millions of gates acting on hundreds of qubits. This is clearly not possible with NISQ devices. That’s where fault-tolerance comes in.

Fault-tolerance requires building ‘logical qubits’ from hundreds or thousands of ‘physical qubits’. Effective ‘logical gates’ on these logical qubits are then performed by applying a large number of gates to the underlying physical qubits. Logical qubits exploit this redundancy to hide the relevant information in intricate superpositions that are spread over many physical qubits and that are protected against noise acting at the physical level. Designing these intricate combinations is the science and art of fault tolerance.
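The idea of hiding information in redundancy can be illustrated with the simplest possible toy: a classical repetition code that stores one bit in d copies and decodes by majority vote. This is an analogy under stated assumptions (independent bit flips with probability p, odd d); real quantum codes such as the surface code must also handle phase errors and cannot simply copy quantum states, which is why they rely on entangled superpositions instead.

```python
from math import comb


def repetition_logical_error(p: float, distance: int) -> float:
    """Probability that a majority of `distance` copies flip, so majority-vote decoding fails."""
    if distance % 2 == 0:
        raise ValueError("use an odd distance so the majority vote is never tied")
    threshold = distance // 2 + 1  # smallest number of flips that outvotes the correct copies
    return sum(comb(distance, k) * p**k * (1 - p) ** (distance - k)
               for k in range(threshold, distance + 1))


for d in (1, 3, 5, 7):
    print(f"d={d}: logical error = {repetition_logical_error(0.01, d):.2e}")
```

With p = 0.01, three copies already reduce the failure probability to about 3 × 10⁻⁴, and each increase in d suppresses it further, as long as p is small enough.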

Thinking about the requirements again, billions of physical gates applied to hundreds of thousands of physical qubits [5] (equivalently, tens of millions of logical gates applied to thousands of logical qubits) is what is needed to deliver the true promise of quantum computing.
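The overhead arithmetic behind numbers like these can be sketched with a rule of thumb often used for the surface code, p_L ≈ A (p/p_th)^((d+1)/2), where p is the physical error rate, p_th the threshold, and d the code distance. The prefactor A = 0.1, the 1% threshold, the physical error rate of 10⁻³, and the workload below are all assumptions chosen for illustration; as footnote 5 notes, real overheads are very sensitive to the type and amount of noise.

```python
# Assumed rule of thumb for the surface code: p_L ~ A * (p / p_th) ** ((d + 1) / 2).
A = 0.1        # assumed prefactor
P_TH = 1e-2    # assumed threshold error rate


def logical_error_rate(p: float, d: int) -> float:
    """Heuristic logical error rate per logical operation at code distance d."""
    return A * (p / P_TH) ** ((d + 1) / 2)


def required_distance(p: float, target: float) -> int:
    """Smallest odd distance whose heuristic logical error rate is at or below target."""
    if p >= P_TH:
        raise ValueError("physical error rate must be below threshold")
    d = 3
    while logical_error_rate(p, d) > target:
        d += 2
    return d


def physical_qubits(num_logical: int, d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits per logical qubit."""
    return num_logical * (2 * d * d - 1)


# Hypothetical workload: physical error rate 1e-3, ~2e7 logical operations, 2,000 logical qubits.
p_phys = 1e-3
target = 1 / 2e7  # keeps the expected number of logical failures over the whole run around one
d = required_distance(p_phys, target)
print(f"distance {d}: {physical_qubits(2000, d):,} physical qubits")
```

Under these assumptions the sketch lands at code distance 13 and 674,000 physical qubits for 2,000 logical qubits, i.e. a few hundred physical qubits per logical qubit, in line with the "hundreds of thousands" quoted above.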

Motivated by the promise of the virtual lab, researchers from industry and academia have identified potentially transformational applications in several fields. Detailed studies explore the qubit and gate costs of these applications and aim to bring these costs down. In the realm of fertilizer manufacture, for instance, quantum simulations are being considered for the study of the nitrogen-fixing FeMoCo molecule. The aim is to emulate its energy-efficient process at an industrial scale to reduce energy consumption, a critical challenge in current manufacturing methods. Similarly, quantum simulation algorithms have been considered to develop new methods for carbon capture. Quantum algorithms are also being considered for the study of advanced battery designs, promising increased energy density and longer lifespans. Pharmaceutical research is another area where quantum simulations are expected to be transformational, aiding faster drug development by providing precise in-silico representations of molecular properties and reactions. Let’s now look at some of these studies in more detail to get a sense of the qubit and gate costs associated with these applications.

New methods for fertilizer manufacture and for carbon capture

One of the most promising applications of quantum simulation is to help develop new and more efficient methods for fertilizer manufacture. This area is interesting because current industrial processes for producing fertilizer require high pressure, high temperature, and substantial energy consumption. In contrast, nitrogen-fixing bacteria, such as those living in the root nodules of legumes, perform a comparable task, nitrogen fixation, at room temperature and pressure while using far less energy. The FeMoCo molecule, a key component of this natural process, can provide insight into how nature achieves this feat and how we could learn from it to design better fertilizer manufacturing processes. This molecule has been extensively studied and is now considered a benchmark task for quantum simulations. Let’s take a brief look at some of the recent works that have looked into the costs of simulating FeMoCo.

- A 2016 work [6] from Microsoft and ETH provided a comprehensive discussion of how quantum simulations could be used to understand reactions such as those involved in nitrogen fixation. The work showed that using quantum simulations to perform ground-state calculations could help identify intermediate molecular structures and all the steps involved in the reaction, thereby providing a comprehensive understanding of the chemical mechanism.

- Fast forward to 2019: a study [7] by researchers from Google, Macquarie University, and Caltech introduced an efficient algorithm for simulating molecules such as FeMoCo. Their estimates indicated that simulating the active space of the FeMoCo molecule might require roughly 10¹¹ Toffoli gates acting on millions of physical qubits.

- The following year, in 2020, another study [8] from Google, Pacific Northwest National Laboratory, ColumbiaU, MacquarieU, and UWashington introduced an algorithm that could efficiently simulate arbitrary molecules. The authors analyzed the performance of the algorithm, which showed that useful simulations required over 2000 logical qubits and more than 10⁹ gates.

In addition to nitrogen fixation, there has also been some work on carbon capture to address climate change. A study [9] in 2020 by Microsoft and ETH estimated the computational costs of determining the ground-state energies of several different structures involved in the catalysis of atmospheric carbon into methanol. These computations call for approximately 10¹⁰–10¹¹ Toffoli gates and roughly 4000 logical qubits.
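To translate Toffoli counts into wall-clock time, multiply by the time each logical Toffoli takes. The 10 µs per gate used below is a hypothetical figure, and the sketch assumes strictly sequential execution; real architectures may parallelize or take longer per gate, so only the order of magnitude is meaningful.

```python
SECONDS_PER_DAY = 86_400


def runtime_days(num_toffolis: float, seconds_per_toffoli: float) -> float:
    """Wall-clock time, in days, if the Toffoli gates are executed strictly one after another."""
    return num_toffolis * seconds_per_toffoli / SECONDS_PER_DAY


# Hypothetical logical Toffoli time of 10 microseconds (an assumption, not a device specification).
TOFFOLI_TIME_S = 10e-6

for count in (1e10, 1e11):
    print(f"{count:.0e} Toffoli gates: about {runtime_days(count, TOFFOLI_TIME_S):.1f} days")
```

At this assumed speed, 10¹⁰ Toffoli gates take a little over a day and 10¹¹ take about 11.6 days, which is why both gate counts and gate speeds matter for practical runtimes.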

Designing new batteries

Improvements in rechargeable batteries, which are used in everything from electric vehicles to smartphones, could have significant economic and environmental benefits. Therefore, new battery designs that offer increased energy density, longer lifespan, and faster charging times are highly sought after.

Quantum simulation algorithms can assist in studying these attributes for new battery designs, including new materials for cathode and anode components, and innovative molecules for electrolytes. A few teams have studied in-depth how quantum simulations might propel new battery designs.

- In 2022, a collaboration between PsiQuantum and Mercedes-Benz [10] explored using quantum simulations to study the electrochemical reactions in battery electrolytes. While substantial portions of the resource estimates focused on photonic architectures, the work suggests that T-gate depths exceeding 10⁹ are necessary.

- In a 2022 study [11], Xanadu, Volkswagen, and other research groups provided a detailed step-by-step procedure for estimating the ground-state energies of an important battery cathode material, dilithium iron silicate. They estimated that these simulations would require more than 2000 logical qubits and over 10¹³ Toffoli gates.

- In a more recent follow-up work [12] from 2023, Xanadu and Volkswagen improved the algorithms for simulating lithium-excess cathode materials, which show promise for new high-energy-density batteries. The authors reported that with this improved algorithm, even simulating the more demanding materials requires a similar number of logical qubits and Toffoli gates: over 2000 and 10¹³, respectively.

- Another recent advance from this year is by a team from Google, QSimulate Inc., BASF, and MacquarieU, who proposed an improved method for simulating materials. They applied it to compute the cathode structure of lithium nickel oxide (LNO) batteries, which are considered a potential replacement for current automotive batteries. These simulations were found to need more than 10¹² gates acting on over 10⁷ physical qubits.

While these developments are indeed promising, there could be significant challenges in actually bringing these new materials into a state suitable for mass production, which could shrink the advantage that quantum simulation provides to a small premium. Nevertheless, given the significant impact that batteries are expected to have on the future of energy and transportation, even a small premium will likely be worth the effort.

Speeding up drug development

As previously mentioned, quantum simulations help us understand the dynamics of chemical reactions by determining the ground-state energies of the various reactants and intermediates involved. It is no surprise, then, that quantum simulations could facilitate the prediction of both the desired and undesired effects of pharmaceutical drugs. Because robust simulations furnish precise in-silico representations of chemical properties [13], they could speed up the drug discovery process.

Several recent studies have estimated the quantum computational costs of simulating molecules that are relevant to drug development. Let’s review some of these studies:

- In 2022, a collaboration between Google, Boehringer Ingelheim, and QSimulate [14] examined the simulation of cytochrome P450, a human enzyme critical to the metabolism of many marketed drugs. The work showed that executing the ground-state energy calculations would require more than 10⁹ gates applied to over 10³ logical qubits.

- Later that same year, researchers from Riverlane and Astex Pharmaceuticals undertook a study [15] of Ibrutinib, a medication used to treat non-Hodgkin lymphoma. They calculated the resources necessary to study the drug using different methods, determining that non-trivial computations would need T-gate counts of over 10¹¹ acting on over 10⁶ physical qubits.

- Recently, in 2023, building on prior work [16] by Google, Boehringer Ingelheim, and others, PsiQuantum, QC Ware, and Boehringer Ingelheim introduced [17] a method that enables direct calculation of molecular observables, as opposed to merely computing ground-state energies. These advances are crucial for drug design, where it is helpful to calculate molecular forces in order to simulate molecular dynamics. The researchers estimated that this algorithm would need to run over 10¹⁴ gates on thousands of logical qubits to produce meaningful results.
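Forces are the negative gradient of the energy with respect to nuclear positions. A naive way to obtain them is by finite differences, which costs two energy evaluations per coordinate, or 6N evaluations for N atoms; this overhead is one reason why methods that target gradients and other observables more directly are attractive. The sketch below uses a toy classical Morse potential for a single bond as a hypothetical stand-in for an expensive energy calculation and compares the central-difference estimate with the analytic force.

```python
import math


def morse_energy(r: float, depth: float = 1.0, width: float = 1.0, r_eq: float = 1.0) -> float:
    """Toy Morse potential E(r) = D * (1 - exp(-a (r - r_eq)))**2 for a bond length r."""
    return depth * (1.0 - math.exp(-width * (r - r_eq))) ** 2


def force_central_difference(energy, r: float, h: float = 1e-4) -> float:
    """Force F = -dE/dr estimated from two energy evaluations at r + h and r - h."""
    return -(energy(r + h) - energy(r - h)) / (2.0 * h)


r = 1.5
# Analytic force for D = a = r_eq = 1: F = -2 (1 - e^{-(r-1)}) e^{-(r-1)}.
exact = -2.0 * (1.0 - math.exp(-(r - 1.0))) * math.exp(-(r - 1.0))
print(force_central_difference(morse_energy, r), exact)
```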

While these works provide strong evidence that future fault-tolerant quantum computers could simulate these particular systems, recent work [18] has also examined whether there is evidence for an exponential quantum advantage in quantum chemistry more generally. The authors did not find such evidence, although they note that quantum computers may still prove useful for ground-state quantum chemistry through polynomial speedups.

Scientific breakthroughs

Many works have considered the simulation of quantum mechanical models that are relevant in open scientific problems including those that could help understand and design high-Tc superconductors.

- For instance, in 2018, a team from Maryland showed that simulating the time evolution of the spin-½ Heisenberg chain requires about 10⁷ single- and two-qubit gates for a chain of n = 100 spins evolved for time t = 100, with an approximation error of 0.001 [19].

- Later, a 2020 collaboration between Microsoft and USherbrooke [20] focused on the quantum simulation of the Hubbard model, which is crucial to the study of high-Tc superconductivity. They estimated that preparing the ground state of a 100-qubit Hubbard model requires around 10⁷ gates.

- Since 2011, quantum field theory has emerged as an area where quantum simulations could aid scientific discovery, and the development and enhancement of algorithms for fault-tolerant quantum computers has become a particular focus in this area [21].

- A 2019 paper [22] by Google, Harvard, Macquarie, and the University of Toronto showed that a careful study of the errors arising in different subroutines of quantum simulation can make the simulations substantially more efficient. The work considered the simulation of simple solid-state materials such as lithium hydride, graphite, and diamond, as well as two paradigmatic solid-state physics models: the uniform electron gas and the Hubbard model. Simulations of these systems could be performed with 10⁷–10⁹ gates acting on 10⁵–10⁶ physical qubits. These numbers are well beyond what is possible with the state of the art, but they seem more achievable than quantum chemistry simulations, so these systems might provide the first breakthrough applications of quantum simulation.
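Several of the estimates above rest on product formulas, also known as Trotterization, in which time evolution under H = Σ H_j is approximated by repeatedly applying the evolution under each term H_j for a short time step. The sketch below checks this numerically for a 4-spin Heisenberg chain using only NumPy; the chain length, evolution time, and step counts are illustrative assumptions. Doubling the number of steps roughly halves the error of this first-order formula, and every extra step costs more gates, which is how high-accuracy simulations of large systems end up needing millions of gates.

```python
import numpy as np

# Single-qubit Pauli matrices and identity.
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
I2 = np.eye(2, dtype=complex)


def pair_operator(pauli, i, n):
    """Return pauli_i * pauli_{i+1} on an n-spin chain, with identities elsewhere."""
    result = np.array([[1.0]], dtype=complex)
    for site in range(n):
        result = np.kron(result, pauli if site in (i, i + 1) else I2)
    return result


def evolve(hermitian, t):
    """exp(-i * hermitian * t), computed from the eigendecomposition of a Hermitian matrix."""
    evals, evecs = np.linalg.eigh(hermitian)
    return evecs @ np.diag(np.exp(-1j * t * evals)) @ evecs.conj().T


def trotter_error(n, t, steps):
    """Spectral-norm distance between exact evolution and a first-order Trotter product."""
    terms = [pair_operator(p, i, n) for i in range(n - 1) for p in (X, Y, Z)]
    exact = evolve(sum(terms), t)
    one_step = np.eye(2 ** n, dtype=complex)
    for term in terms:  # apply each local term's evolution for one short time step
        one_step = evolve(term, t / steps) @ one_step
    approx = np.linalg.matrix_power(one_step, steps)
    return np.linalg.norm(exact - approx, 2)


for steps in (10, 20, 40):
    print(f"{steps} Trotter steps: error {trotter_error(n=4, t=1.0, steps=steps):.2e}")
```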

The algorithms behind these applications are sophisticated and are getting better steadily

Given the promise of these applications and also the daunting costs of implementing them, it is no surprise that several teams of researchers are investing substantial effort into developing improved algorithms for simulating molecules and materials with greater accuracy. In this process, each individual element of these algorithms has been refined. And meticulous attention has been devoted to optimizing the interaction between different elements. In particular, errors arising from the approximations made within these algorithms have been identified and the costs associated with reducing these errors have been systematically optimized.

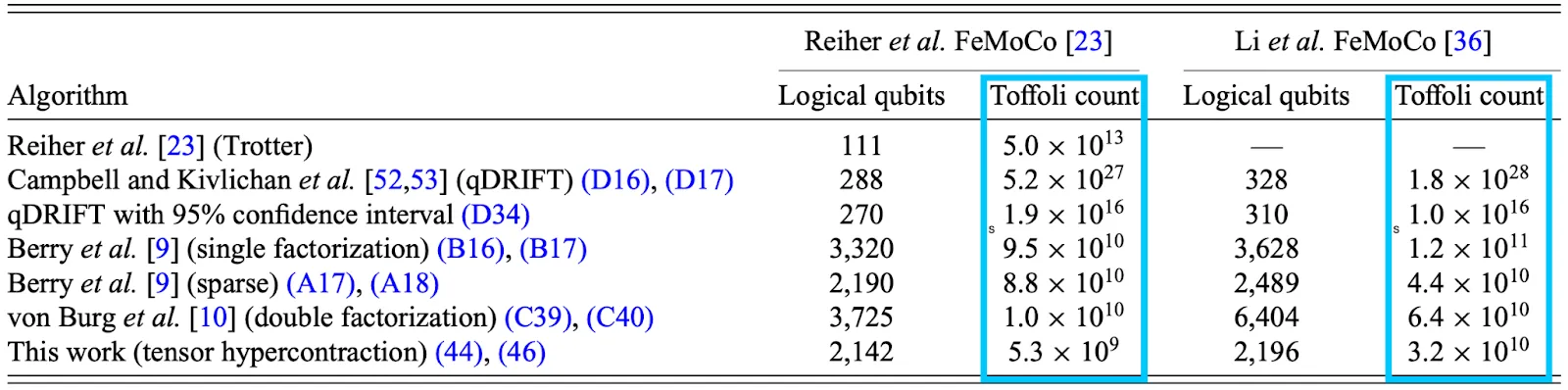

The outcome of this ongoing process is a succession of increasingly advanced algorithms with continually decreasing costs. To get a feeling for the innovation that goes into the field, take a look at this table from a recent paper [23], which highlights the progressive reduction in the numbers of qubits and gates required, and the innovations behind these cost reductions.

The following table from the same work shows the reduction in the gate count that these innovations have achieved towards the FeMoCo benchmark that we looked at earlier.

The progress in a relatively short time frame is nothing short of remarkable. There’s good reason to anticipate that this trend of improvements will persist as we move closer to the development of fault-tolerant devices capable of running these sophisticated algorithms.

Summing it all up

We’ve seen how numerous teams of researchers have looked closely at the costs of performing useful simulations of different molecules and materials. The assessments for meaningful quantum simulations consistently reveal massive computational costs: astronomical numbers of gates acting on equally large numbers of qubits. Ideally, the costs for these algorithms would be lower, but unfortunately, they aren’t.

We’ve seen a rapid reduction in the cost of running these algorithms but we’re still a factor of 10,000 away from the number of gates that are needed for useful quantum computation. The only plausible method to achieve these transformative applications of quantum computing is through the use of fault-tolerance.

That’s why several hardware teams are working on building fault-tolerant quantum computers, one step at a time. In the rest of this series, let’s look in more detail at these efforts – what fault-tolerance architectures and roadmaps are these teams following; how the first demonstrations of fault tolerance are already here; and what all this means for the future of quantum computing.

Summary

- There are several transformative applications of quantum computing – computational tasks that are of immense commercial and societal value that are efficiently solvable on a quantum computer but that are infeasible for classical computers.

- Different transformative applications require circuits of different sizes, i.e., with different numbers of qubits and different numbers of gates acting on these qubits.

- Among the least demanding transformational applications of quantum computing is quantum simulation – a tool that allows quantum computers to simulate the properties of molecules and materials. Quantum simulations could enable scientific breakthroughs, faster drug development, and new materials for batteries, the manufacture of fertilizer, and carbon capture.

- While the number of qubits required for useful quantum simulation is only a factor of 10 or so more than what is available today, the number of gates required is 10,000x or more away from today’s best NISQ devices.

- The only way to meet these gate requirements is through fault-tolerance, by assembling larger noisy circuits into smaller, effectively noiseless logical circuits.

- Although we’re orders of magnitude away from the scale needed for transformational applications, the costs are coming down fast. As we will see in the next posts, there is rapid progress in terms of hardware and architectures on the path to fault tolerance.

Acknowledgements:

Thanks to Shreya P. Kumar, Varun Seshadri, Rachele Fermani and André M. König for helpful comments and suggestions.

Up Next

Upcoming posts in this series will dive deeper into the current efforts towards fault-tolerance including roadmaps for building FTQCs, the first demonstrations of logical qubits in hardware, and the implications of the transition from NISQ to FTQC.

1. Quantum supremacy using a programmable superconducting processor, Nature 574, 505–510 (2019)
2. The situation remains unchanged even if the recently developed and implemented error mitigation techniques are used. In particular, the high likelihood of failure and the lack of verifiability are only marginally mitigated by these techniques. There is still little in the way of proving that the output obtained from NISQ devices is correct.
3. [1905.09749] How to factor 2048 bit RSA integers in 8 hours using 20 million noisy qubits
4. [2211.15465] Active volume: An architecture for efficient fault-tolerant quantum computers with limited non-local connections; and [2306.08585] How to compute a 256-bit elliptic curve private key with only 50 million Toffoli gates
5. This discussion assumes certain overheads: the number of physical qubits needed to make a logical qubit and the number of physical gates that need to be applied in order to run logical gates. The overheads are very sensitive to the type and amount of noise present at the physical level.
6. [1605.03590] Elucidating Reaction Mechanisms on Quantum Computers
7. [1902.02134] Qubitization of Arbitrary Basis Quantum Chemistry Leveraging Sparsity and Low Rank Factorization
8. [2011.03494] Even more efficient quantum computations of chemistry through tensor hypercontraction
9. [2007.14460] Quantum computing enhanced computational catalysis
10. [2104.10653] Fault-tolerant resource estimate for quantum chemical simulations: Case study on Li-ion battery electrolyte molecules
11. [2204.11890] Simulating key properties of lithium-ion batteries with a fault-tolerant quantum computer
12. [2302.07981] Quantum simulation of battery materials using ionic pseudopotentials
13. For more details, see this perspective put together by authors from QC Ware, Google, Boehringer Ingelheim, BASF, UToronto, and UVienna: [2301.04114] Drug design on quantum computers
14. [2202.01244] Reliably assessing the electronic structure of cytochrome P450 on today’s classical computers and tomorrow’s quantum computers
15. [2206.00551] A perspective on the current state-of-the-art of quantum computing for drug discovery applications
16. [2111.12437] Efficient quantum computation of molecular forces and other energy gradients
17. [2303.14118] Fault-tolerant quantum computation of molecular observables
18. [2208.02199] Is there evidence for exponential quantum advantage in quantum chemistry?
19. [1805.08385] Faster quantum simulation by randomization
20. [2006.04650] Resource estimate for quantum many-body ground-state preparation on a quantum computer
21. Some of the references include [1111.3633] Quantum Algorithms for Quantum Field Theories; [1112.4833] Quantum Computation of Scattering in Scalar Quantum Field Theories; [1404.7115] Quantum Algorithms for Fermionic Quantum Field Theories; and [2110.05708] Nearly optimal quantum algorithm for generating the ground state of a free quantum field theory
22. [1902.10673] Improved Fault-Tolerant Quantum Simulation of Condensed-Phase Correlated Electrons via Trotterization
23. Lee et al., PRX Quantum 2, 030305 (2021), DOI: 10.1103/PRXQuantum.2.030305 (CC BY 4.0 license); arXiv version: [2011.03494] Even more efficient quantum computations of chemistry through tensor hypercontraction